We can’t predict the future of cloud security, but one thing is certain. The cloud gave us three things that data centers never could: elasticity, scalability, and transformation.

Although all major public cloud providers like AWS, Azure, and GCP have improved their cloud security over the past decade, new trends pop up every year.

This guide will help you understand the most important concepts and the latest cloud security trends.

Let’s get started with the core concept of cloud security.

The Shared Responsibility Model – The Pillar of Cloud Security

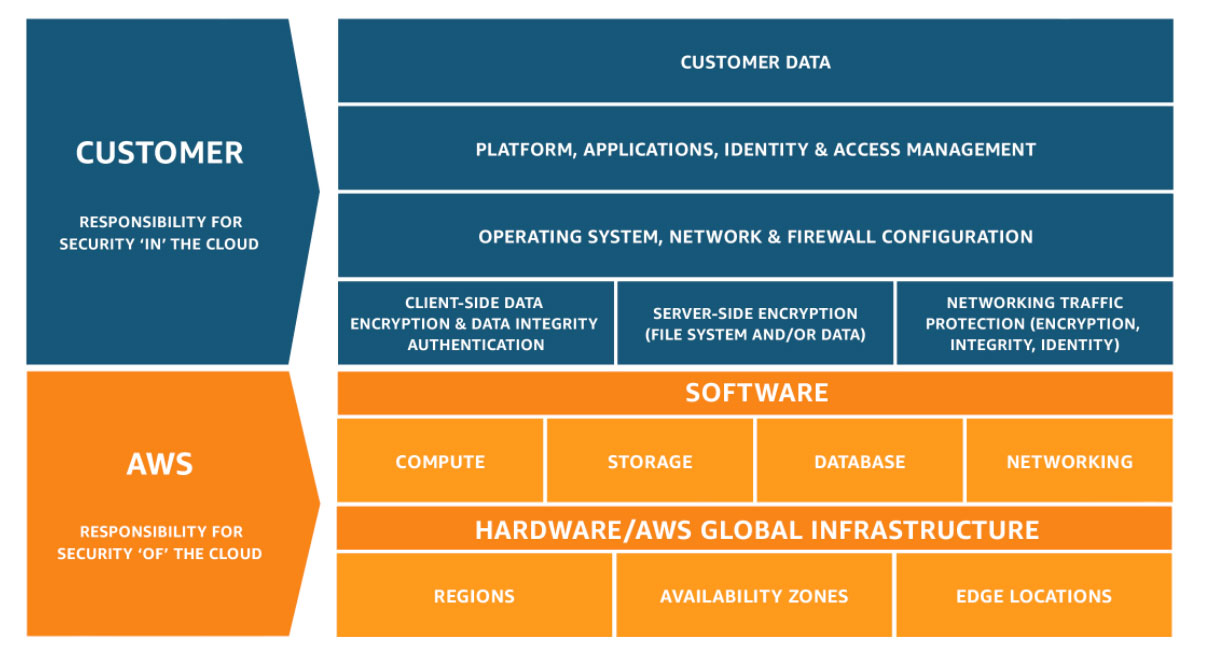

The shared responsibility model is a framework that defines the responsibilities of cloud service providers and their customers.

By migrating to the public cloud, you’re losing direct access to your servers, and, in some cases, you won’t even know the exact geographical location of your data.

This requires a fundamental shift in how an organization designs its IT infrastructure.

Just as you don’t have complete access to your resources, you’re also not fully responsible for your security.

This is the cornerstone of the shared responsibility model.

How Shared Responsibility Works on AWS

Here’s how Amazon defines the concept of shared responsibility:

As you can see, AWS manages the entire physical layer: the data centers, which are organized into regions, availability zones, and edge locations.

Customers pick the region and availability zone (AZ) where the data or service will reside, but they don’t have physical access to it, nor is the exact location ever disclosed to the public.

The client’s responsibility is to provision and maintain a desired operating system, to configure internal networking and firewall rules, and to ensure client-side data encryption.

Depending on the services used, responsibilities can be grouped into three categories:

- Infrastructure-as-a-Service (IaaS): AWS provides the infrastructure up to the operating system layer, and customers are responsible for configuring and maintaining their OS, applications, and related components.

- Platform-as-a-Service (PaaS): In this category, the OS is pre-configured, but customers must deploy and secure their applications. AWS Elastic Beanstalk and Amazon EKS fall into this group.

- Software-as-a-Service (SaaS): The service is fully managed by the cloud provider, and customers mainly handle data import and export. AWS services like RDS, S3, and DynamoDB fall under this category.
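To make the three categories concrete, here is a minimal Python sketch of the split described above. The layer names and the `responsibility` helper are illustrative assumptions, not an AWS API, and real services divide responsibilities in more detail than four layers can capture.

```python
from enum import Enum


class Owner(Enum):
    PROVIDER = "provider"
    CUSTOMER = "customer"


# Simplified stack, ordered from bottom to top (an assumption for illustration).
LAYERS = ["physical", "operating_system", "application", "data"]

# The lowest layer the customer is responsible for in each service model,
# following the three categories listed above.
CUSTOMER_STARTS_AT = {
    "IaaS": "operating_system",
    "PaaS": "application",
    "SaaS": "data",
}


def responsibility(model: str) -> dict[str, Owner]:
    """Map every layer to the party responsible for it under a service model."""
    start = LAYERS.index(CUSTOMER_STARTS_AT[model])
    return {
        layer: Owner.CUSTOMER if index >= start else Owner.PROVIDER
        for index, layer in enumerate(LAYERS)
    }
```

Calling `responsibility("PaaS")` marks the operating system as the provider's job and the application as yours, which matches the PaaS bullet above.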

Now that you understand the most important concept in cloud security, let’s take a look at the top trends in cloud security in recent years.

Top 8 Cloud Security Trends to Follow

1. DevOps and the rise of DevSecOps

If we asked you if you were utilizing the DevOps methodology in your business, your answer would probably be positive.

It’s been used for years in the tech industry. But the industry now requires a much higher emphasis on security.

The key to proper development cycles with top-notch security is to put security right in the middle of your DevOps process.

Some have coined it DevSecOps — Development, Security, Operations.

This process is designed to automatically integrate security at every phase of the software development lifecycle.

From the moment the git commit happens, there should be security mechanisms in all stages of your CI/CD pipelines, from build to test to deployment in all environments.

Scan your code, secure artifact repositories against unauthorized access and malware, employ immutable infrastructure, and ensure every component is patched, minimally privileged, and regularly audited.
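As one small example of a security check that can run right after a commit, the Python sketch below scans text for strings that look like long-term AWS access key IDs. The `find_leaked_keys` helper is a hypothetical name, and the pattern only covers the `AKIA` key ID format. A real pipeline would rely on a dedicated secret scanner with many more rules.

```python
import re

# Long-term AWS access key IDs start with "AKIA" followed by
# 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_leaked_keys(text: str) -> list[tuple[int, str]]:
    """Return (line number, key ID) pairs for every suspected key in the text."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in AWS_KEY_ID.finditer(line):
            findings.append((line_no, match.group(1)))
    return findings


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
    sample = 'region = "us-east-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'
    for line_no, key_id in find_leaked_keys(sample):
        print(f"line {line_no}: possible AWS access key ID {key_id[:8]}...")
```

A CI job could run a check like this on every changed file and fail the build when the list of findings is not empty.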

2. Virtual private cloud isn’t enough

Virtual private cloud (VPC) is a managed service that all public cloud providers have offered for years, and it’s a great way of isolating your cloud resources from public access and establishing proper network segmentation in the cloud.

But on its own, this kind of network security is no longer enough for today’s threats.

Companies are starting to use additional cloud services that you should consider to improve your network security.

Some of the most used ones are:

- Cloud-Based Firewall is a cloud security measure that protects your network by filtering incoming and outgoing traffic through cloud infrastructure. It offers scalability, flexibility, and real-time threat detection, making it effective for safeguarding your network against various cyber threats and unauthorized access.

- WAF (Web Application Firewall) sits between a user’s device and a web server, inspecting and filtering incoming web traffic. WAFs analyze the traffic for suspicious or malicious activities and can block or allow it based on predefined security rules. By doing so, WAFs protect web applications from attacks like SQL injection, cross-site scripting, and other common web vulnerabilities, enhancing their overall security and reliability.

- DDoS Mitigation is a specialized security solution that helps protect your online services and websites from DDoS attacks. These services detect and mitigate the flood of malicious traffic generated by DDoS attacks, ensuring that your online resources remain accessible to legitimate users.

- VPC Flow Logs is an AWS feature that captures and logs information about the network traffic flowing in and out of your Virtual Private Cloud. These logs record data such as source and destination IP addresses, ports, protocol, and packet and byte counts. They are useful for monitoring and analyzing network traffic patterns and for enhancing security by detecting and investigating unusual or unauthorized network activity.
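To show what working with flow logs can look like, here is a minimal Python sketch that parses records in the default (version 2) VPC Flow Logs format and counts rejected connections per source address. The `FlowRecord`, `parse_record`, and `rejected_by_source` names are illustrative, and the sketch assumes the default field order. Custom log formats would need a different field list.

```python
from collections import Counter
from typing import Iterable, NamedTuple, Optional

# Field order of the default (version 2) flow log record format.
FIELDS = (
    "version account_id interface_id srcaddr dstaddr srcport dstport "
    "protocol packets bytes start end action log_status"
).split()


class FlowRecord(NamedTuple):
    srcaddr: str
    dstaddr: str
    dstport: int
    protocol: int
    byte_count: int
    action: str


def parse_record(line: str) -> Optional[FlowRecord]:
    """Parse one record; return None for headers, NODATA, or malformed lines."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        return None
    raw = dict(zip(FIELDS, parts))
    if raw["log_status"] != "OK":
        # NODATA and SKIPDATA records carry "-" placeholders instead of values.
        return None
    return FlowRecord(
        srcaddr=raw["srcaddr"],
        dstaddr=raw["dstaddr"],
        dstport=int(raw["dstport"]),
        protocol=int(raw["protocol"]),
        byte_count=int(raw["bytes"]),
        action=raw["action"],
    )


def rejected_by_source(lines: Iterable[str]) -> Counter:
    """Count REJECT records per source IP address."""
    counts: Counter = Counter()
    for line in lines:
        record = parse_record(line)
        if record is not None and record.action == "REJECT":
            counts[record.srcaddr] += 1
    return counts
```

A source address with an unusually high reject count is a reasonable starting point for an investigation, for example a host probing the RDP or SSH ports.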

3. SASE framework

With more and more software residing in the cloud, on-prem solutions are becoming obsolete, but what if you’re a huge digital enterprise? How can you manage cloud security without on-prem?

The Secure Access Service Edge (SASE) framework offers cloud security solutions for big digital enterprises by combining wide area networking (WAN) with multiple security features like anti-malware and cloud access security brokers (CASB).

The key components of SASE are:

- Cloud-native Security — SASE leverages the cloud to provide security services, including features like firewalls, secure web gateways, data loss prevention, and zero-trust network access, all delivered from the cloud.

- Identity-Centric Access — It focuses on user and device identity, ensuring that access controls are tailored to the specific needs and privileges of each user.

- SD-WAN Integration — SASE integrates Software-Defined Wide Area Networking (SD-WAN) capabilities, allowing organizations to optimize network performance while ensuring security across various locations and cloud services.

- Global Network Backbone — SASE providers typically operate a global network backbone, ensuring low latency and consistent performance for users worldwide.

- Scalability and Flexibility — It can adapt to the changing needs of businesses, making it well-suited for organizations with dynamic workforces and evolving network requirements.

4. Zero trust model

Most modern cloud security trends emphasize the Zero Trust principle in their approach to network security.

The Zero Trust model operates on the assumption that nothing can be trusted by default, whether it’s inside or outside of an organization.

Every user and device must be authenticated and authorized before they are granted access to resources. Multi-factor authentication (MFA) is often used to add an extra layer of security.

When accessing the service, all users are granted only the minimum level of access and permissions required for their task.

Networks are segmented into smaller, isolated segments, and traffic between these segments is strictly controlled.
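The checks described above can be sketched as a deny-by-default access decision in Python. The `Request` fields, the `GRANTS` table, and the user and resource names are all hypothetical. A real deployment would delegate these checks to an identity provider and a policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    authenticated: bool         # the user proved their identity
    mfa_passed: bool            # a second factor was verified
    device_authenticated: bool  # the device is known and verified
    action: str
    resource: str


# Hypothetical least-privilege grants: each user gets only the
# (action, resource) pairs their task requires.
GRANTS = {
    "alice": {("read", "billing-db")},
    "bob": {("read", "billing-db"), ("write", "billing-db")},
}


def is_allowed(request: Request, grants: dict = GRANTS) -> bool:
    """Deny by default; allow only verified requests with an explicit grant."""
    verified = (
        request.authenticated
        and request.mfa_passed
        and request.device_authenticated
    )
    if not verified:
        return False
    return (request.action, request.resource) in grants.get(request.user, set())
```

Note that the function never checks where the request comes from. Being inside the corporate network earns no trust, which is the core of the model.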

All of this leads to enhanced security as it’s a much more proactive approach than traditional cloud security methods.

With the increasing reliance on cloud services, Zero Trust has to be a priority for all organizations operating in the cloud.

5. Shift from instances to containers

Microservice architecture is highly popular in software development as it enables developers to release code in smaller, frequent increments and quickly observe the benefits.

It also allows companies to provide users with new versions of applications daily, making businesses oriented towards customers and their needs.

But to make it possible, we need technologies that empower developers to deliver software in a uniform, scalable, and fast manner.

This was all accomplished by the Docker container, which slowly replaced virtual machines and cloud instances on public cloud providers.

Since modern cloud companies have hundreds or even thousands of microservices running in Docker containers, the need for an orchestration tool to run all of those containers quickly appeared.

In comes Kubernetes, open-source software originally developed by Google and now maintained by the Cloud Native Computing Foundation. It has become the new industry standard and is available as a managed service on all major public cloud providers.

Managed K8s clusters offer you auto-upgrade, auto-scaling, out-of-the-box management of IP addresses, and high availability. Also, because Kubernetes runs as a managed service on most public clouds, the provider already handles much of the groundwork for major compliance standards such as GDPR or PCI-DSS, although securing your own workloads remains your responsibility.

If you use GKE, Google Cloud’s managed Kubernetes, Google even offers to scan your containers for security vulnerabilities, free of charge.

All of these management features give you security off the shelf, so you can focus on your software development needs.

6. Employ IAM best practices

The IAM (Identity & Access Management) layer is a core pillar of modern cloud security that focuses on managing and controlling user access to resources and systems.

It involves authentication, authorization, and permissions to ensure that the right individuals have the appropriate level of access while protecting against unauthorized access and potential security risks.

Not implementing IAM properly can result in breaches like the one that happened at Capital One, which came as a consequence of misconfigured IAM access.

Utilizing IAM best practices should be your primary concern when designing your cloud environment.

Follow the least privilege rule when creating and assigning IAM policies, organize users into groups, issue IAM access keys only to users who need them (and rotate them every 3 to 6 months), and always enforce two-factor authentication.
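As a rough illustration of the least privilege rule, the Python sketch below flags `Allow` statements in an IAM policy document that use wildcard actions or resources. The `overly_broad_statements` helper and the example bucket name are assumptions. The check is a simple heuristic that ignores elements such as `NotAction` and `Condition`, and some actions legitimately require `"Resource": "*"`.

```python
def as_list(value):
    """IAM policy elements may be a single value or a list of values."""
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy: dict) -> list[int]:
    """Return the indexes of Allow statements with wildcard actions or resources."""
    flagged = []
    for index, statement in enumerate(as_list(policy.get("Statement", []))):
        if statement.get("Effect") != "Allow":
            continue
        actions = as_list(statement.get("Action", []))
        resources = as_list(statement.get("Resource", []))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        wildcard_resource = "*" in resources
        if wildcard_action or wildcard_resource:
            flagged.append(index)
    return flagged


# Example policy: the first statement is narrowly scoped, the second is not.
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
    ],
}
```

Here `overly_broad_statements(EXAMPLE_POLICY)` flags only the second statement, since the first one grants a single action on a single bucket.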

Once all the best practices are in place, deploy them to your AWS account using infrastructure-as-code tools such as AWS CloudFormation or Terraform rather than configuring them manually.

7. Cloud compliance enablers

Besides the usual legal obligations your company must fulfill, compliance standards like the GDPR, PCI-DSS, or HIPAA are forcing cloud companies to adhere to certain sets of requirements.

They are designed to protect customer data in the cloud, and not complying with them can lead to major fines and lawsuits.

Depending on the industry, your organization might need to ensure compliance with a certain standard to do any business at all.

Even if that’s not the case, following compliance standards is a great way to make your business sustainable, reputable, and trustworthy, which can help you maintain the trust of your customers in the long run.

Today, public cloud providers offer numerous tools that automate compliance processes and make life easier for your security team by replacing manual controls, checklists, and manual engineering work.

For instance, if you’re using AWS, check the following compliance-related tools: Amazon GuardDuty, AWS Artifact, AWS Config, and AWS Security Hub.
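To give a feel for how automated checks replace a manual checklist, here is a minimal Python sketch of rule-based configuration checks. It does not use any AWS API. The rule names, the resource fields (`encrypted`, `public`), and the inventory format are all assumptions made up for the illustration.

```python
from typing import Callable

Resource = dict
Rule = Callable[[Resource], bool]

# Hypothetical rules: each one returns True when the resource is compliant.
RULES: dict[str, Rule] = {
    "encryption-at-rest-enabled": lambda resource: resource.get("encrypted") is True,
    "no-public-access": lambda resource: resource.get("public") is False,
}


def evaluate(resources: list[Resource]) -> list[tuple[str, str]]:
    """Return (resource id, rule name) pairs for every failed check."""
    return [
        (resource["id"], name)
        for resource in resources
        for name, rule in RULES.items()
        if not rule(resource)
    ]


if __name__ == "__main__":
    inventory = [
        {"id": "bucket-a", "encrypted": True, "public": False},
        {"id": "bucket-b", "encrypted": False, "public": True},
    ]
    for resource_id, rule_name in evaluate(inventory):
        print(f"{resource_id}: failed {rule_name}")
```

Running checks like these on a schedule turns a one-off audit into continuous monitoring, which is the main benefit the managed compliance tools provide.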

8. Cybersecurity mesh

One of the new trends in cloud security is the rapid adoption of the cybersecurity mesh concept, which aims to ensure the security of data and assets in the cloud.

Instead of relying on a central security perimeter, it emphasizes distributed, interconnected security controls that are integrated at various points across a network.

This method creates a web of security controls that dynamically adapt to the modern cloud environment.

This involves creating a distributed network and infrastructure that forms a security perimeter around each individual user or device connected to the network.

Implementing cybersecurity mesh allows companies to manage their security policies from a central point across the whole cloud.

Summary

Following the latest cloud security trends is a must for modern companies as cloud security continues to evolve.

Let’s recap the key points you should think about:

- Security must be implemented in every phase of the software development lifecycle.

- You shouldn’t rely solely on a virtual private cloud to power your security.

- The Secure Access Service Edge (SASE) framework helps big enterprises manage cloud security without on-prem solutions.

- Nobody should be blindly trusted — Implement the Zero Trust model.

- Organize and run all of your cloud containers with Kubernetes.

- Ensure that the right individuals have the appropriate level of access with IAM.

- Think about compliance in every part of your process.

- Ensure your data’s security by embracing distributed security control.

Use these eight cloud security tips to give yourself an edge in the vast cloud security landscape.

Remember that there’s so much to learn and implement to be secure on all fronts.

For instance, most companies still aren’t implementing cloud-archiving software for their communications, which hinders their security and compliance efforts.

Archive your internal and external communication with Jatheon’s cloud archiving solution. Keep your business compliant with all communication retention laws and safeguard your data.
